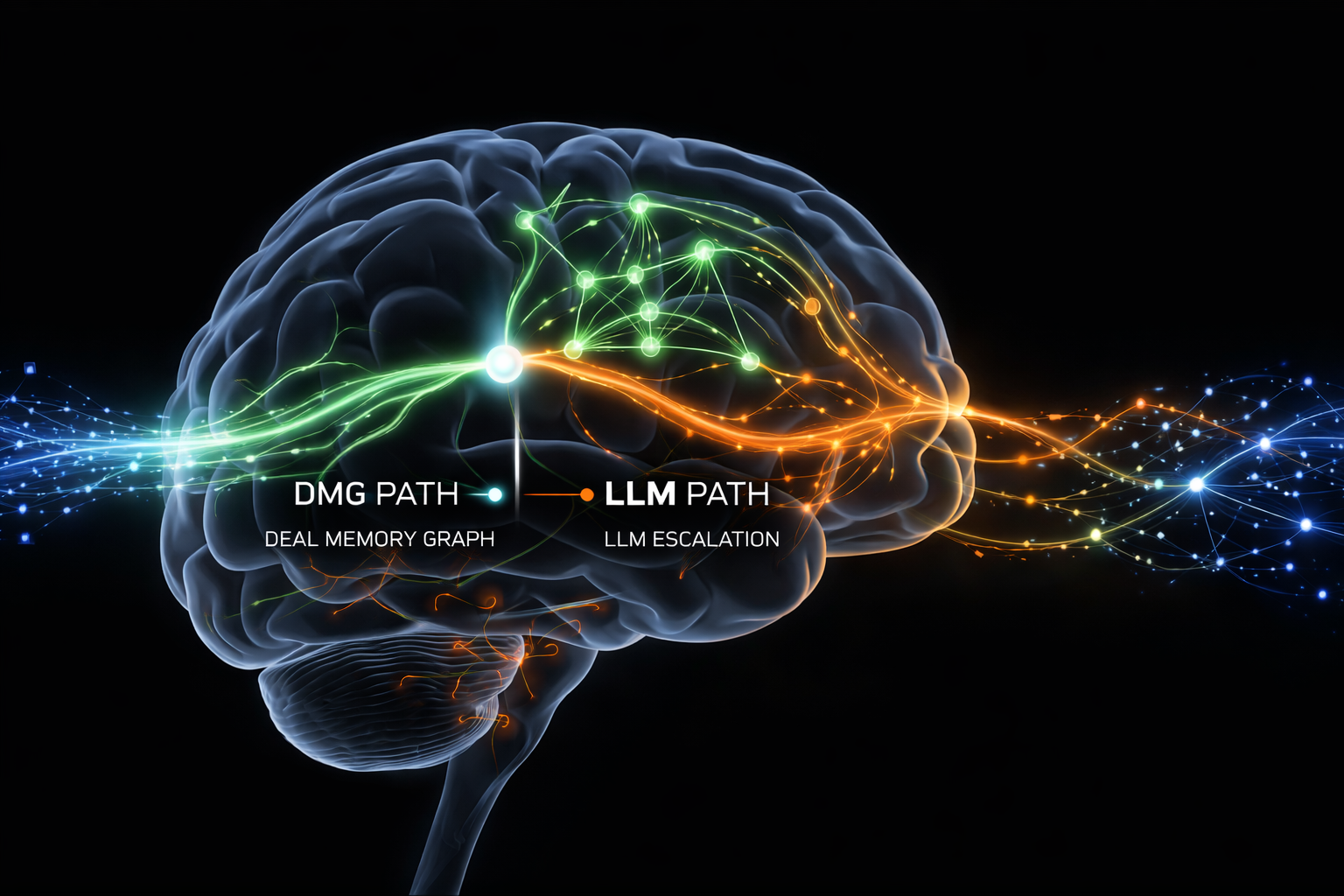

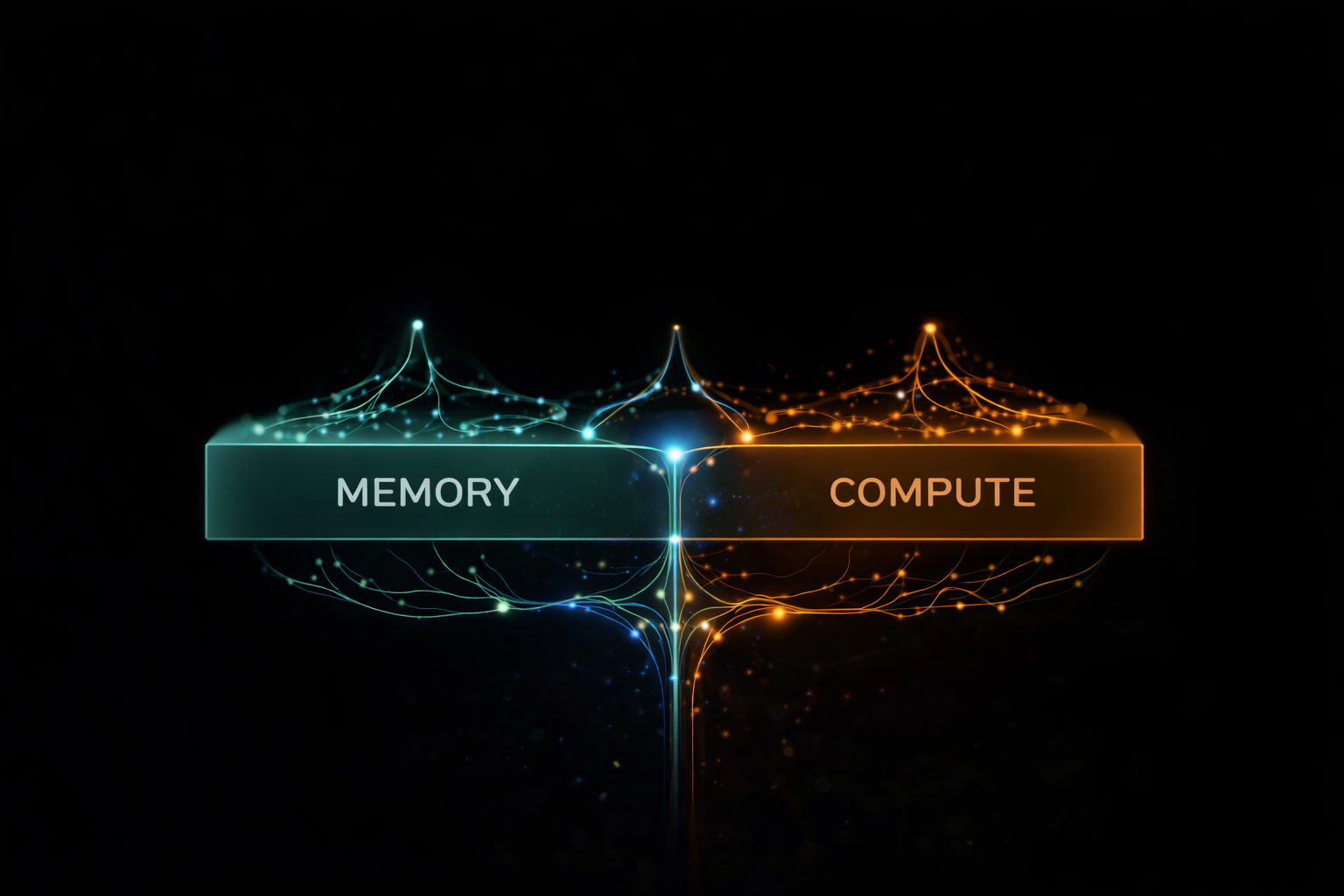

Shirley OS uses a persistent memory layer, the Deal Memory Graph™, to store context, outcomes, and confidence over time.

Instead of treating every interaction as new, the system learns from prior decisions and applies that knowledge to later ones.

This enables consistent, auditable decisions while reducing unnecessary compute and operational cost.
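
To make the idea concrete, here is a minimal illustrative sketch in Python of a memory layer that stores context, outcome, and confidence, and reuses a prior decision when it is trusted enough. This is not Shirley OS code: the names `MemoryRecord`, `DealMemory`, `min_confidence`, and `decide` are hypothetical, the 0.8 threshold is an arbitrary assumption, and a plain dictionary stands in for whatever graph structure the real system uses.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class MemoryRecord:
    """One remembered decision (hypothetical shape, not the product's schema)."""
    context: str       # what the decision was about
    outcome: str       # what was decided
    confidence: float  # 0.0 to 1.0, how much the outcome is trusted


@dataclass
class DealMemory:
    """Toy stand-in for a persistent memory layer, keyed by context string."""
    min_confidence: float = 0.8  # assumed reuse threshold
    records: Dict[str, MemoryRecord] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def decide(self, context: str,
               compute: Callable[[str], MemoryRecord]) -> MemoryRecord:
        prior = self.records.get(context)
        # Reuse a prior decision only if it is trusted enough.
        if prior is not None and prior.confidence >= self.min_confidence:
            self.audit_log.append(f"reused:{context}")
            return prior
        # Otherwise pay for a fresh computation and remember the result.
        fresh = compute(context)
        self.records[context] = fresh
        self.audit_log.append(f"computed:{context}")
        return fresh


calls: List[str] = []


def expensive_review(context: str) -> MemoryRecord:
    """Placeholder for a costly step, such as a model call."""
    calls.append(context)
    return MemoryRecord(context, "approve", 0.9)


memory = DealMemory()
first = memory.decide("renewal:acme", expensive_review)
second = memory.decide("renewal:acme", expensive_review)
```

In this sketch the second call returns the stored record without invoking `expensive_review` again, and `audit_log` records whether each decision was computed or reused. That is one simple way the consistency, auditability, and compute savings described above could arise.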